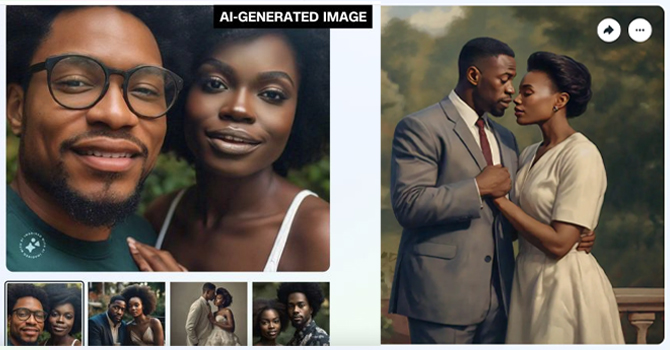

Meta’s AI image generator is facing criticism for failing to accurately depict mixed-race couples and friendships. In a recent report for The Verge, writer Mia Sato described her frustrating attempts to use the tool to produce realistic images of a mixed-race couple.

Sato tried to create images of an East Asian man and a Caucasian woman using prompts such as “Asian man and white wife” or “Asian man and Caucasian woman on wedding day.” Instead, the generator repeatedly produced images of two Asian people. Only once did it return something close to the request, and even that image showed an older man holding a younger, lighter-skinned Asian woman.

This problem stands in stark contrast to the recent uproar over Google’s Gemini. That chatbot was found to generate historically inaccurate and offensive images, such as Black US Founding Fathers, Asian Nazi soldiers, and female Catholic popes. Google quickly apologized and paused Gemini’s ability to generate images of people.

Sato criticized the system for confining users’ imagination to societal biases and called Meta’s results “egregious.” The generator kept reproducing stereotypes even after she revised her text prompts, and it continued to show two Asian people even when asked to depict platonic relationships. Meta’s bot also appeared to reinforce biases related to skin tone: given the prompt “Asian woman,” it showed light-skinned, East Asian-looking faces. It added cultural markers, such as a sari or bindi, without being asked for them. It also blended elements of different Asian cultures, as in one image of a couple dressed in a mix of Japanese and Chinese clothing.

The system frequently produced pictures of older Asian men with younger-looking Asian women, suggesting an age-related bias as well. These outputs fail to reflect either the diversity of Asian populations or the reality of mixed-race marriages. An AI system inevitably mirrors the biases of its developers and trainers, as well as those in the data it is trained on. Many Twitter users also pointed out the generator’s shortcomings, underscoring how important it is to address bias in AI systems.

Mixed-race marriage is far from unusual: 17% of US newlyweds marry someone of a different race or ethnicity, and about three in ten Asian American newlyweds have a spouse from outside their own race or ethnicity. Despite this, Western media often portrays Asians as a monolithic group, with little attention to their diversity and cultural differences.

Meta’s struggle to portray mixed-race couples and friends shows why diversity and inclusion in AI systems matter. As artificial intelligence continues to transform our digital world, it must reflect the realities of our diverse society rather than reinforce harmful stereotypes and biases.
